
Introduction

Unless we’re working with video feeds that are streamed directly or have hard real-time requirements, chances are high that some sort of HTTP- or gRPC-based API handles the requests to the other microservices that actually run an inference session. The backend might be an OpenAI-API-compatible server, the Triton Inference Server, or something custom.

Unfortunately, not all endpoints support gRPC, so a low-latency, high-throughput solution is required for HTTP requests. If, for example, FastAPI is used to build an API that forwards requests via HTTP, we’re basically locked into async and therefore into httpx or aiohttp. However, I observed some weird behavior of httpx when using HTTP/2 that made me believe it might not be completely async and that some parts are actually blocking.
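One way to check a suspicion like this, independent of any HTTP library, is to run a heartbeat coroutine next to the code under test and record how late each tick fires. The following is only a minimal sketch: `measure_loop_lag` is a hypothetical helper (not part of httpx or aiohttp), and the two workloads use `time.sleep` and `asyncio.sleep` as stand-ins for blocking and properly yielding client code.

```python
import asyncio
import time


async def measure_loop_lag(workload, interval: float = 0.01) -> float:
    """Run `workload()` and return the worst heartbeat delay in seconds.

    Hypothetical helper: a heartbeat task sleeps for `interval` and records
    how much later than requested it wakes up. A large value means something
    held the event loop without yielding.
    """
    worst = 0.0
    done = False

    async def heartbeat() -> None:
        nonlocal worst
        while not done:
            start = time.perf_counter()
            await asyncio.sleep(interval)
            lag = time.perf_counter() - start - interval
            worst = max(worst, lag)

    task = asyncio.create_task(heartbeat())
    await asyncio.sleep(0)  # let the heartbeat start before the workload
    try:
        await workload()
    finally:
        done = True
        await task
    return worst


async def blocking_workload() -> None:
    time.sleep(0.2)  # stand-in for a call that blocks the event loop


async def async_workload() -> None:
    await asyncio.sleep(0.2)  # stand-in for a call that yields to the loop


def compare() -> tuple[float, float]:
    """Return (lag with blocking workload, lag with async workload)."""
    blocking = asyncio.run(measure_loop_lag(blocking_workload))
    cooperative = asyncio.run(measure_loop_lag(async_workload))
    return blocking, cooperative


if __name__ == "__main__":
    blocking, cooperative = compare()
    print(f"blocking workload: worst lag {blocking * 1000:.1f} ms")
    print(f"async workload:    worst lag {cooperative * 1000:.1f} ms")
```

Passing a coroutine function that performs the actual httpx or aiohttp request as `workload` would indicate whether either client stalls the loop for a noticeable time.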

Benchmark results and conclusions

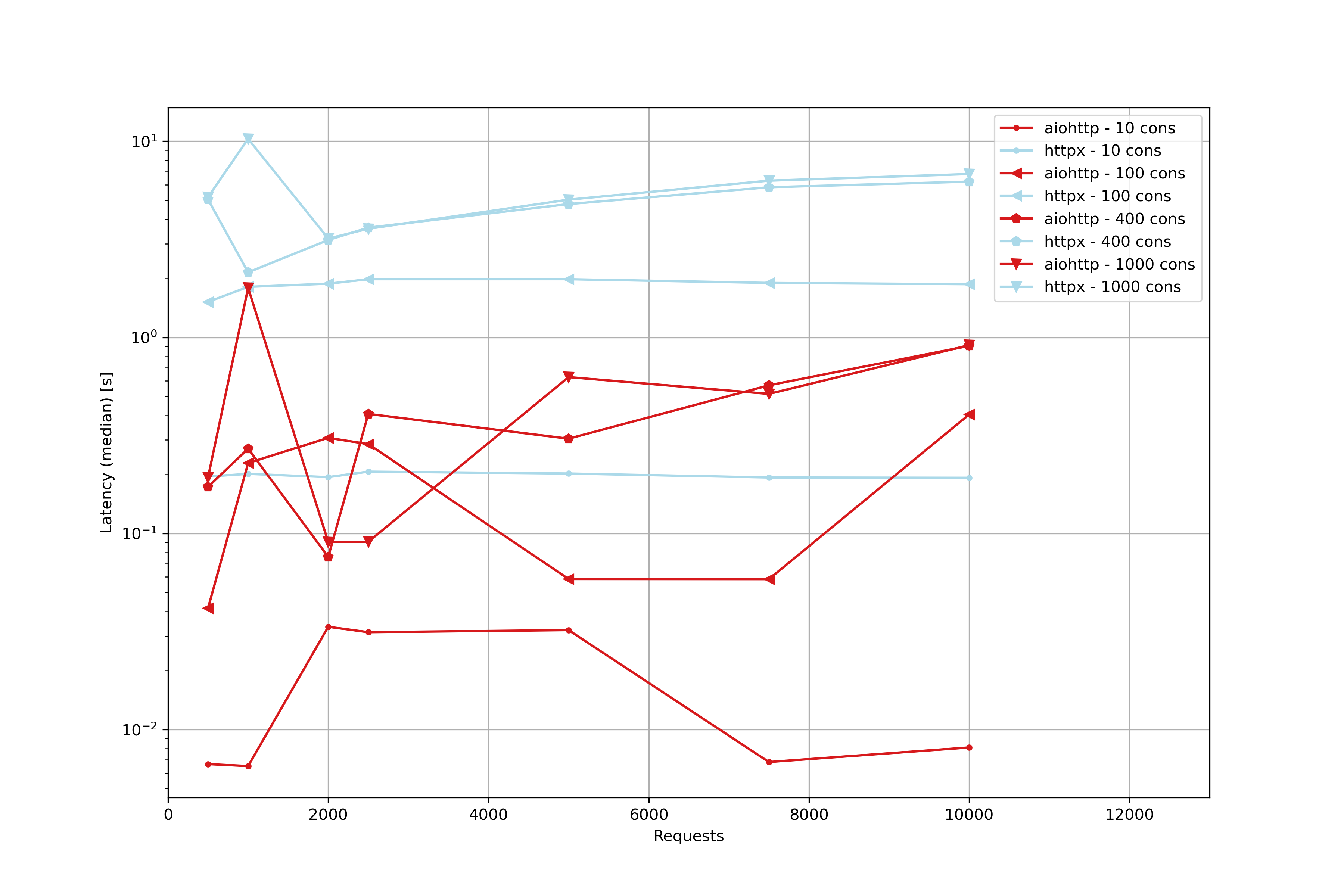

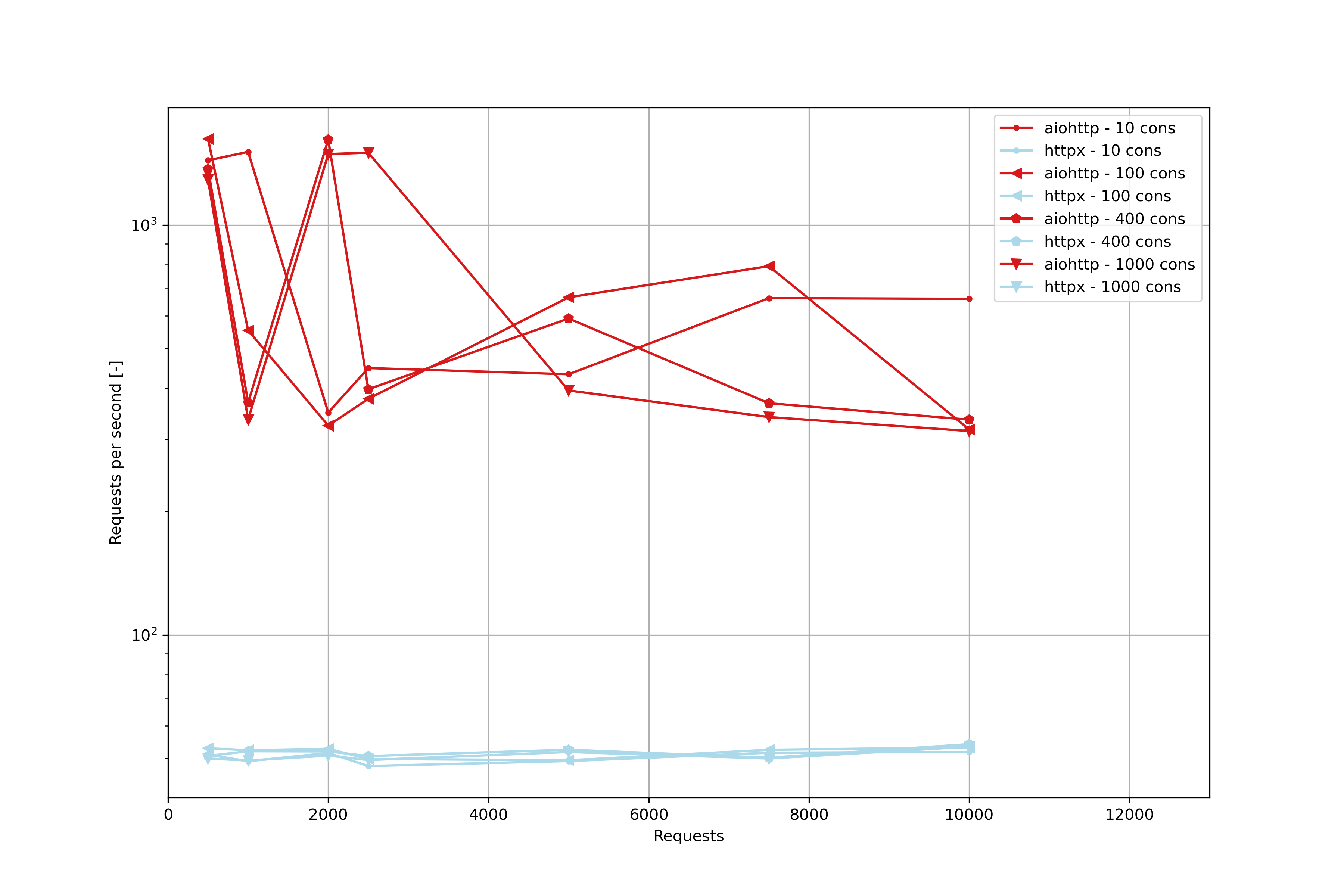

Apparently, even without HTTP/2 enabled, latencies and requests per second are a lot worse when httpx is used.

Therefore, use aiohttp ;).

Benchmark scripts

Python server script:

```python
import os
import sys
import uuid

import aiohttp
import httpx
import uvicorn
from fastapi import FastAPI, status
from pydantic import BaseModel

# Base URL of the instance that answers the forwarded requests.
# It must end with a slash because the path is appended directly.
REQ_ENDPOINT = os.getenv("REQUEST_URL", None)
if REQ_ENDPOINT is None:
    sys.exit("REQUEST_URL is not set")
else:
    REQ_ENDPOINT += "request_response/"

app = FastAPI()


class RandomResp(BaseModel):
    message: str


class ResponsePayload(BaseModel):
    status: int
    message: str


def get_random_str() -> str:
    return str(uuid.uuid4())


@app.get("/request_response/")
async def req_resp() -> RandomResp:
    return RandomResp(message=get_random_str())


@app.get("/send_request/httpx/")
async def send_request_httpx() -> ResponsePayload:
    """
    Send an HTTP GET request to the endpoint using httpx.
    """
    resp_status: int = 0  # 0 = failed, 1 = forwarded request succeeded
    msg: str = ''
    # A new client is created per request, as in the benchmark.
    async with httpx.AsyncClient() as client:
        resp = await client.get(REQ_ENDPOINT)
        if resp.status_code == status.HTTP_200_OK:
            resp_status = 1
            resp_loaded = resp.json()
            msg = resp_loaded["message"]
    return ResponsePayload(
        status=resp_status,
        message=msg
    )


@app.get("/send_request/aiohttp/")
async def send_request_aiohttp() -> ResponsePayload:
    """
    Send an HTTP GET request to the endpoint using aiohttp.
    """
    resp_status: int = 0  # 0 = failed, 1 = forwarded request succeeded
    msg: str = ''
    # A new session is created per request, as in the benchmark.
    async with aiohttp.ClientSession() as session:
        async with session.get(REQ_ENDPOINT) as resp:
            if resp.status == status.HTTP_200_OK:
                resp_status = 1
                data = await resp.json()
                msg = data["message"]
    return ResponsePayload(
        status=resp_status,
        message=msg
    )


if __name__ == "__main__":
    uvicorn.run(
        "server:app",
        reload=False,
        workers=1,
        host=os.getenv("API_HOST", None),
        # Fall back to 8000 (the port the benchmark script targets);
        # int(None) would raise a TypeError if API_PORT is unset.
        port=int(os.getenv("API_PORT", "8000")),
        loop="uvloop",
        log_level="error"
    )
```

Run two instances of this server: one receives the incoming benchmark requests and the other answers the requests that the first one forwards.
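The two processes could be started from a small launcher like the one below. This is a sketch under assumptions: the server script is saved as `server.py` (which matches the `"server:app"` import string), port 8001 is an arbitrary choice while 8000 is the port the benchmark script targets, and `instance_env` and `launch` are hypothetical helpers. Note that the second process also needs `REQUEST_URL` set, because the script exits when the variable is missing, even though that process never forwards anything.

```python
import os
import subprocess
import sys


def instance_env(port: int, request_url: str, host: str = "0.0.0.0") -> dict[str, str]:
    """Build the environment for one server process (hypothetical helper)."""
    env = dict(os.environ)
    env.update(
        {
            "API_HOST": host,
            "API_PORT": str(port),
            # Must end with "/" because the server appends "request_response/".
            "REQUEST_URL": request_url,
        }
    )
    return env


# Assumed port layout: 8000 receives the load, 8001 answers forwarded requests.
BACKEND_URL = "http://127.0.0.1:8001/"
backend_env = instance_env(8001, BACKEND_URL)   # REQUEST_URL is set but unused here
frontend_env = instance_env(8000, BACKEND_URL)  # forwards to the backend


def launch() -> None:
    """Start both processes and wait until they exit or Ctrl+C is pressed."""
    procs = [
        subprocess.Popen([sys.executable, "server.py"], env=env)
        for env in (backend_env, frontend_env)
    ]
    try:
        for proc in procs:
            proc.wait()
    except KeyboardInterrupt:
        for proc in procs:
            proc.terminate()


if __name__ == "__main__":
    launch()
```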

Benchmark script:

I used oha to generate the HTTP load:

```bash
#!/bin/bash

requests=(500 1000 2000 2500 5000 7500 10000)
connections=(2 10 20 25 50 100 200 400 600 800 1000)
endpoints=("httpx" "aiohttp")

for lib in "${endpoints[@]}"
do
    for n in "${requests[@]}"
    do
        for c in "${connections[@]}"
        do
            printf '%s_%s_%s\n' "${n}" "${c}" "${lib}"
            oha --no-tui -j -n "${n}" -c "${c}" "http://0.0.0.0:8000/send_request/${lib}/" > "${lib}_${n}_${c}.json"
        done
    done
done
```
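The loop writes one JSON file per combination, named `<lib>_<n>_<c>.json`. To compare the runs, these files can be collected into a single table. The sketch below shows one possible way; `parse_name` and `collect` are hypothetical helpers, and the JSON keys `summary` and `requestsPerSec` are an assumption about oha's JSON output that should be checked against a real result file before relying on them.

```python
import json
import re
from pathlib import Path

# File names follow the pattern used by the benchmark script: <lib>_<n>_<c>.json
FILENAME = re.compile(r"^(?P<lib>httpx|aiohttp)_(?P<n>\d+)_(?P<c>\d+)\.json$")


def parse_name(name: str):
    """Return (lib, requests, connections) or None if the name does not match."""
    match = FILENAME.match(name)
    if match is None:
        return None
    return match["lib"], int(match["n"]), int(match["c"])


def collect(result_dir: str = ".") -> list[dict]:
    """Read all result files in `result_dir` into a list of rows."""
    rows = []
    for path in sorted(Path(result_dir).glob("*.json")):
        parsed = parse_name(path.name)
        if parsed is None:
            continue  # not a benchmark result file
        lib, n, c = parsed
        data = json.loads(path.read_text())
        # ASSUMPTION: key names; verify them against an actual oha output file.
        rps = data.get("summary", {}).get("requestsPerSec")
        rows.append(
            {"lib": lib, "requests": n, "connections": c, "requests_per_sec": rps}
        )
    return rows


if __name__ == "__main__":
    for row in collect():
        print(row)
```

Rows where `requests_per_sec` is `None` indicate that the assumed key names do not match the file contents.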