Last update 2023-03-25:

At a reader's request, I looked at the performance differences between Python and C++ when the output images are pre-allocated.

Contents

- Introduction

- Rotating Images

- Benchmark Results

- Benchmark Results (pre-allocated mats)

- Other Options for Image Rotation

Introduction

Is rotation needed that often in practice? No. Except for computational video stabilization, rotation is usually only required if cameras are mounted upside down or rotated by 90 degrees, because cameras usually have mounting sockets on one side only. In such cases, the rotation is ideally performed on the camera itself (modified read-out/data transfer), so that it incurs zero compute cost on the machine whose computer vision pipeline is processing the camera feed. This is very common with industrial cameras and even with the higher-quality cameras in the low-budget segment.

Rotating Images

Normally, we would expect that a plain rotation matrix is simply applied to an image. However, OpenCV uses a transformation matrix that enables general affine transformations (cv::warpAffine), which includes rescaling. Therefore, the transformation matrix looks like this

\[M = \begin{bmatrix} \alpha & \beta & (1 - \alpha) \cdot c_x - \beta \cdot c_y \\ -\beta & \alpha & \beta \cdot c_x + (1 - \alpha) \cdot c_y \end{bmatrix}\]

where

\[\alpha = scale \cdot \cos\theta\] \[\beta = scale \cdot \sin\theta\]

and \( (c_x, c_y) \) is the center of rotation. cv::warpAffine itself accepts any 2x3 affine matrix, so it can also apply, for example, a pure translation (\( \theta = 0 \), scale = 1 and a non-zero last column). The transformation matrix (rotation matrix) can be generated easily using cv::getRotationMatrix2D, as shown in the examples below. The inverse of such a matrix can be obtained with cv::invertAffineTransform.
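As a cross-check of the formulas for α and β, here is a minimal pure-Python sketch that builds the 2x3 matrix documented for cv::getRotationMatrix2D and inverts it in the usual way for an affine transformation. The helper names `rotation_matrix_2d`, `invert_affine` and `apply_affine` are hypothetical and not part of OpenCV; real code should use the OpenCV functions.

```python
import math


def rotation_matrix_2d(center, angle_deg, scale):
    """Build the 2x3 matrix [[a, b, tx], [-b, a, ty]] for a CCW rotation."""
    cx, cy = center
    theta = math.radians(angle_deg)
    alpha = scale * math.cos(theta)
    beta = scale * math.sin(theta)
    return [
        [alpha, beta, (1.0 - alpha) * cx - beta * cy],
        [-beta, alpha, beta * cx + (1.0 - alpha) * cy],
    ]


def invert_affine(m):
    """Invert a 2x3 affine matrix [A | t] as [A^-1 | -A^-1 t]."""
    (a, b, tx), (c, d, ty) = m
    det = a * d - b * c
    if det == 0.0:
        raise ValueError("matrix is not invertible")
    ia, ib, ic, id_ = d / det, -b / det, -c / det, a / det
    return [
        [ia, ib, -(ia * tx + ib * ty)],
        [ic, id_, -(ic * tx + id_ * ty)],
    ]


def apply_affine(m, point):
    """Apply a 2x3 affine matrix to a single (x, y) point."""
    x, y = point
    return (m[0][0] * x + m[0][1] * y + m[0][2],
            m[1][0] * x + m[1][1] * y + m[1][2])


if __name__ == "__main__":
    m = rotation_matrix_2d(center=(63.5, 63.5), angle_deg=45, scale=1.0)
    p = apply_affine(m, (10.0, 20.0))
    back = apply_affine(invert_affine(m), p)
    print(m)
    print(p, back)  # `back` should be (10.0, 20.0) up to rounding
```

The center of rotation is a fixed point of the matrix, which is a quick sanity check for the last column.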

Positive rotation angles lead to counterclockwise (CCW) rotation. The image size remains identical: areas that end up outside the image bounds are cut off, and areas for which the original image provides no data are black. An example using rotation angles of [0, 45, 90, 135, 180, 225, 270, 315, 360] is shown below.

(Rotation of 180 degrees actually causes a small black border)
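One plausible explanation for this border, assuming the rotation center is computed with integer division as `(w//2, h//2)` (as in the Python snippet further below), is that a 180-degree rotation about the column c_x reads destination column x from source column 2 * c_x - x. The following sketch, with the hypothetical helpers `source_columns_180` and `out_of_bounds`, lists which destination columns have no source column for both center conventions. The same reasoning applies to rows.

```python
def source_columns_180(width, center_x):
    """For a 180-degree rotation about center_x, return the source column
    that each destination column x reads from: 2 * center_x - x."""
    return [2 * center_x - x for x in range(width)]


def out_of_bounds(width, center_x):
    """Destination columns whose source column lies outside [0, width - 1]."""
    sources = source_columns_180(width, center_x)
    return [x for x, src in enumerate(sources) if not 0 <= src <= width - 1]


width = 8
# integer center, as computed by w // 2: column 0 has no source pixel
print(out_of_bounds(width, width // 2))        # [0]
# pixel-centered convention, (w - 1) / 2: every column has a source pixel
print(out_of_bounds(width, (width - 1) / 2))   # []
```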

As mentioned in the introduction, the most common rotation in practice is a rotation by 180 degrees. If we work with video recordings instead of live camera feeds, this rotation needs to be done on the computer while reading the files. Using cv::flip is a computationally cheaper alternative to applying an affine transformation.
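To illustrate why a flip can stand in for a 180-degree rotation, the sketch below works on a tiny image stored as nested Python lists. The helpers `flip_both_axes` and `rotate_180_by_mapping` are hypothetical stand-ins, not OpenCV calls, and the rotation assumes the center ((w - 1) / 2, (h - 1) / 2). Flipping around both axes (flip code -1) only reorders pixels, while the mapping variant has to compute a source coordinate for every destination pixel.

```python
def flip_both_axes(img):
    """Reverse the row order and the column order (like flip code -1)."""
    return [row[::-1] for row in img[::-1]]


def rotate_180_by_mapping(img):
    """Rotate by 180 degrees about ((w - 1) / 2, (h - 1) / 2) by looking up,
    for every destination pixel, the source pixel (w - 1 - x, h - 1 - y)."""
    h, w = len(img), len(img[0])
    return [[img[h - 1 - y][w - 1 - x] for x in range(w)] for y in range(h)]


img = [
    [1, 2, 3],
    [4, 5, 6],
]
print(flip_both_axes(img))         # [[6, 5, 4], [3, 2, 1]]
print(rotate_180_by_mapping(img))  # [[6, 5, 4], [3, 2, 1]]
```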

Both approaches work with cv::cuda on NVIDIA GPUs as well (see below).

CPU

// C++
const int iterations = 100;
const int min_size = 128;
const int max_size = 16385;
const double resize_val = 1.0;
const std::vector<int> rotation_angles =
    {45, 90, 135, 180, 225, 270, 315};

std::vector<cv::Size> resolutions_cv;
int img_size = min_size;
while (img_size < max_size+1)
{
    resolutions_cv.push_back(cv::Size(img_size,img_size));
    img_size *= 2;
}

// [...] (for loops, resizing images, etc.)

// flip example
cv::flip(img_resized, img_rotated, -1);

// rotation example
cv::Point2f center_coord(static_cast<float>(img_resized.cols - 1) / 2,
                         static_cast<float>(img_resized.rows - 1) / 2);
cv::Mat rotation_matrix = cv::getRotationMatrix2D(center_coord,
                                                  static_cast<double>(angle),
                                                  resize_val);
// applying affine transformation (resolution is of class cv::Size)
cv::warpAffine(img_resized, img_rotated, rotation_matrix, resolution);

# Python
iterations = 100
min_size = 128
max_size = 16385
resize_val = 1.0

resolutions_cv = []
img_size = min_size
while (img_size < max_size+1):
    resolutions_cv.append((img_size, img_size))
    img_size *= 2

rotation_angles = [45, 90, 135, 180, 225, 270, 315]

# [...] (for loops, resizing images, etc.)

# flip example
img_rotated = cv2.flip(img_resized, -1)

# rotation example
(h, w) = img_resized.shape[:2]
center = (w//2, h//2)
rotation_matrix = cv2.getRotationMatrix2D(center,
                                          angle,
                                          resize_val)
img_rotated = cv2.warpAffine(img_resized,
                             rotation_matrix,
                             resolution)

GPU

The generation of the rotation matrix remains the same:

// C++
// flip example
cv::cuda::flip(g_img_resized, g_img_rotated, -1);

// rotate example
cv::Point2f center_coord(static_cast<float>(g_img_resized.cols - 1) / 2,
                         static_cast<float>(g_img_resized.rows - 1) / 2);
cv::Mat rotation_matrix = cv::getRotationMatrix2D(center_coord,
                                                  static_cast<double>(angle),
                                                  resize_val);
cv::cuda::warpAffine(g_img_resized, g_img_rotated, rotation_matrix, resolution);

# Python
# flip example
g_img_rotated = cv2.cuda.flip(g_img_resized, -1)

# rotate example
g_img_rotated = cv2.cuda.warpAffine(g_img_resized,
                                    rotation_matrix,
                                    resolution)

Benchmark Results

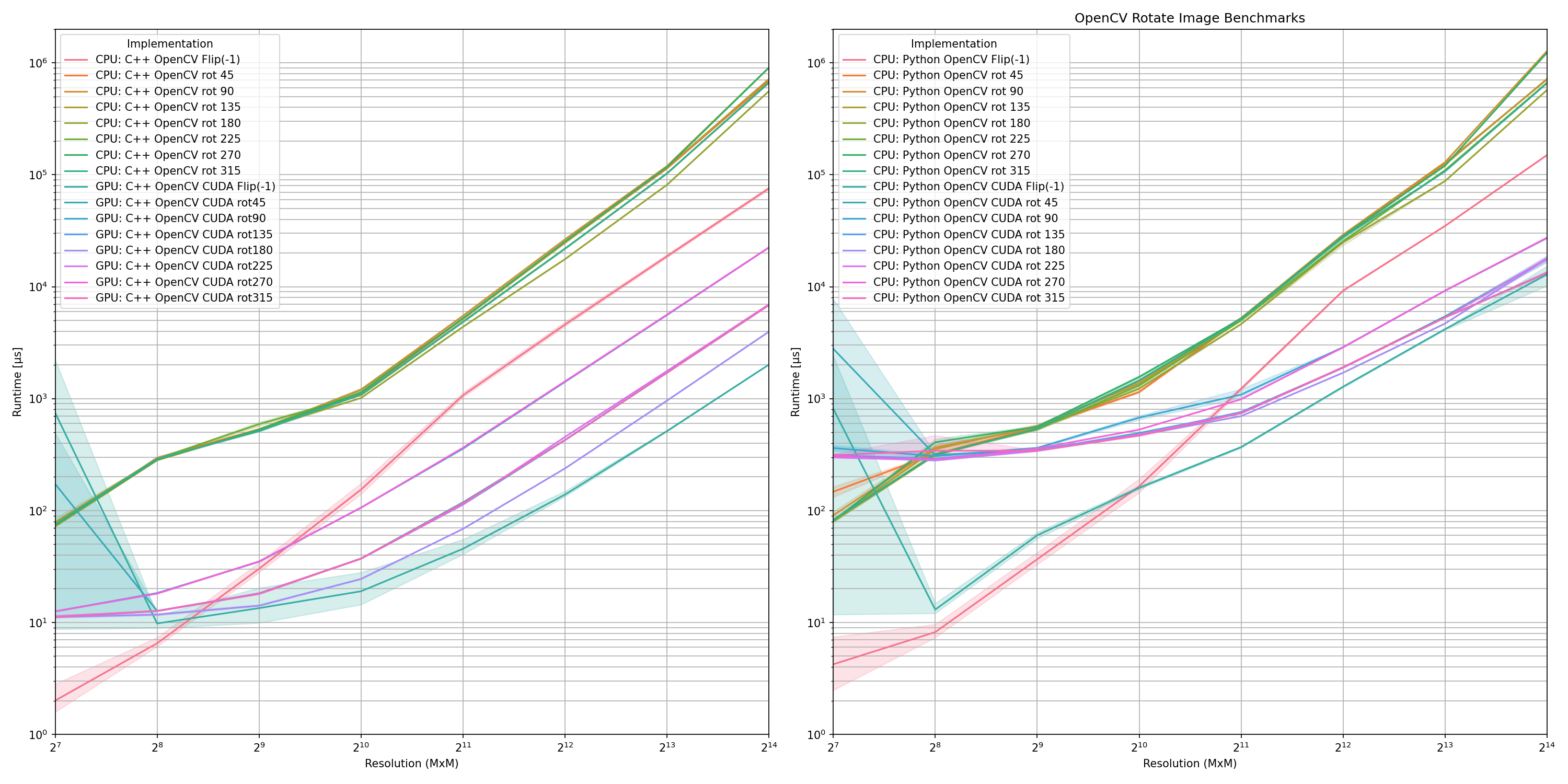

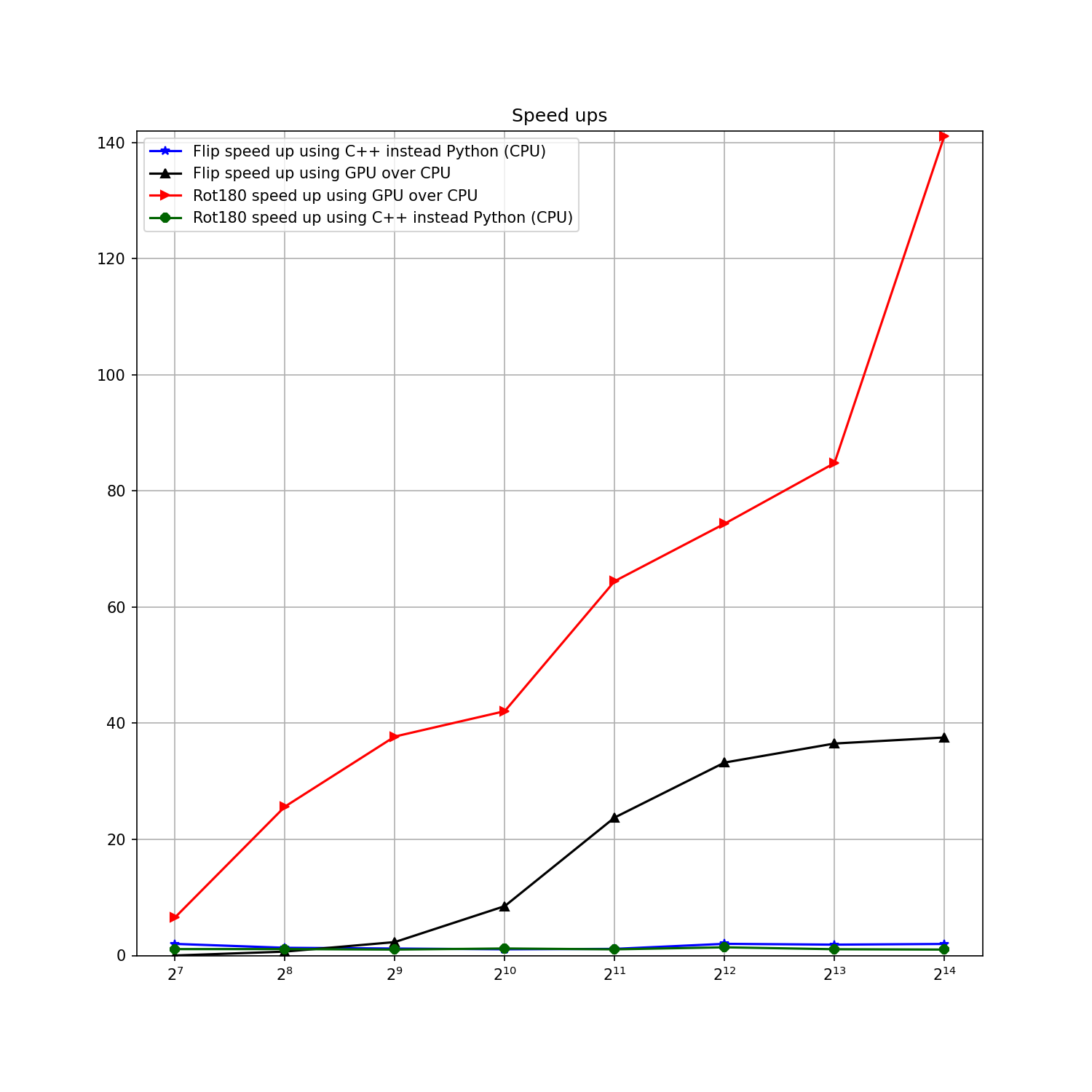

The benchmarks clearly show that the C++ versions are faster than the Python versions and that cv::flip is significantly faster than rotating images by 180 degrees with an affine transformation. As almost always, there are some jumps in the benchmark results, which are most likely caused either by data transfer (to the GPU) or by exceeding cache limits, which means that main memory (RAM) needs to be accessed instead of the L1, L2 or L3 caches. Furthermore, rotations by angles other than 180 degrees are visibly slower. We can assume that this is caused by the checks whether pixels are still within bounds after the affine transformation is applied, as the image size remains constant.
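To put the cache argument into numbers, the following sketch computes the memory footprint of one image per benchmarked resolution. It assumes 8-bit, 3-channel images (as loaded with IMREAD_COLOR) and reuses the doubling loop of the benchmark. The helper name `image_bytes` is hypothetical, and since cache sizes depend on the CPU, no thresholds are assumed here.

```python
def image_bytes(size, channels=3, bytes_per_channel=1):
    """Memory needed for one square image of size x size pixels."""
    return size * size * channels * bytes_per_channel


min_size = 128
max_size = 16385

# same doubling loop as in the benchmark: 128, 256, ..., 16384
resolutions = []
img_size = min_size
while img_size < max_size + 1:
    resolutions.append(img_size)
    img_size *= 2

for size in resolutions:
    kib = image_bytes(size) / 1024
    print(f"{size:>6} x {size:<6} {kib:>12.0f} KiB")
```

The smallest image (128 x 128) needs 48 KiB and the largest one (16384 x 16384) needs 768 MiB. Each operation reads a source image and writes a destination image, so the working set is roughly twice that.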

It seems like we're getting consistent speed-ups of 20% to 100% by using C++ instead of Python. The larger the image we are processing, the higher the speed-up of using a GPU over a CPU.

Benchmark Results (pre-allocated mats)

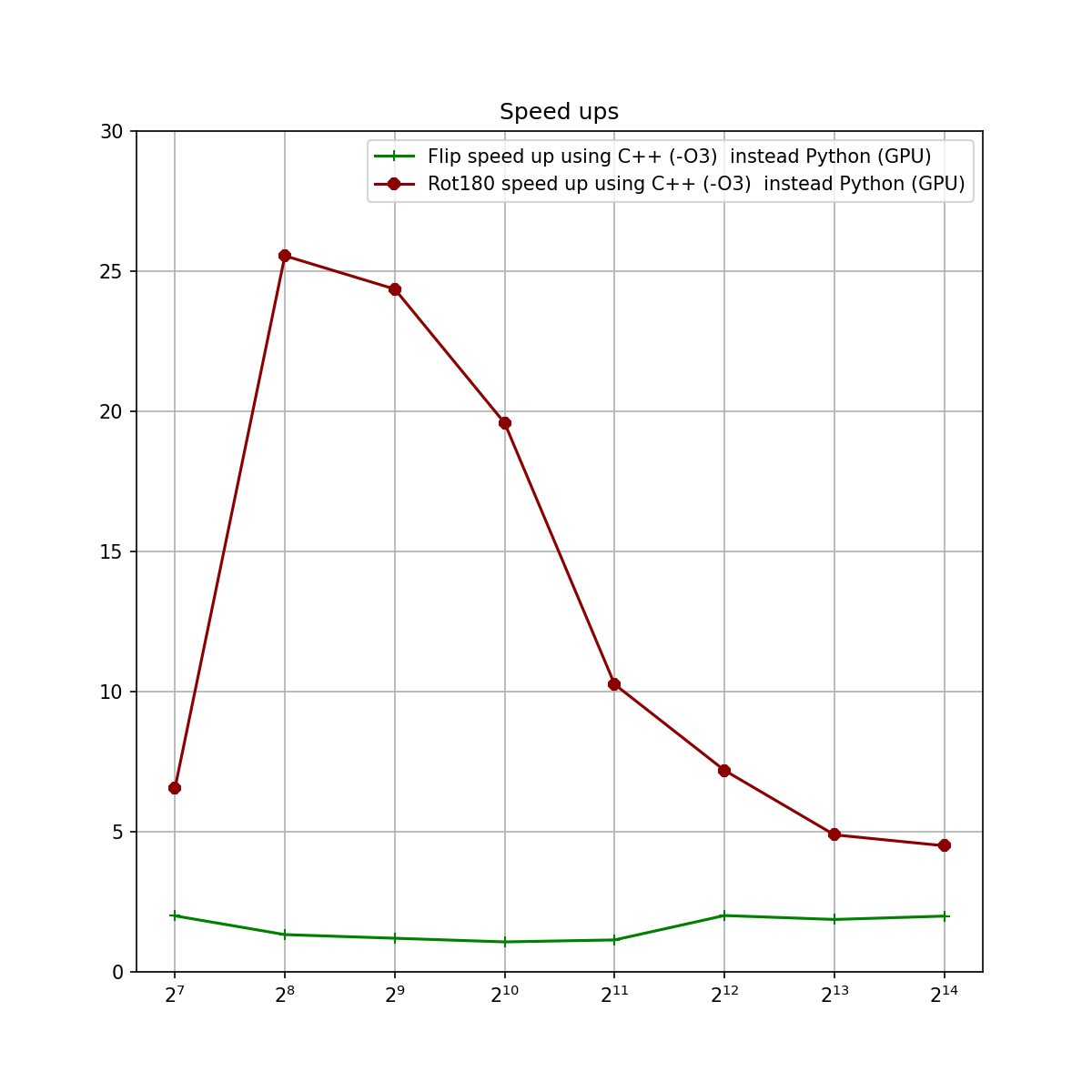

At a reader's request, I'm publishing the source code and also adding a variation to it that initializes the output images for each transformation. NB! OpenCV 4.7 instead of OpenCV 4.6 is used. In the original version, the output images were not initialized in the respective C++ versions either. The version above compiles the C++ code with -O3 optimizations.
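The difference between the two variants can be sketched without OpenCV. In the stdlib-only example below, `flip_new` allocates a fresh output buffer on every call, while `flip_into` writes into a buffer that was allocated once before the timing loop. The function names, the `time_calls` helper and the flat `bytearray` image layout are assumptions made for illustration. The actual benchmark passes a pre-allocated destination image to the OpenCV functions instead, and the timings of this toy example say nothing about OpenCV itself.

```python
import time


def flip_new(src):
    """Allocate a new buffer on every call and fill it with src reversed."""
    return src[::-1]


def flip_into(src, dst):
    """Write src reversed into dst, which was allocated by the caller."""
    dst[::-1] = src


def time_calls(func, iterations):
    """Return the run time of each call in nanoseconds."""
    durations = []
    for _ in range(iterations):
        tic = time.perf_counter_ns()
        func()
        toc = time.perf_counter_ns()
        durations.append(toc - tic)
    return durations


size = 512
# single-channel image as a flat buffer; reversing it flips both axes
src = bytearray(i % 256 for i in range(size * size))
dst = bytearray(len(src))  # pre-allocated once, outside the timed loop

t_new = time_calls(lambda: flip_new(src), iterations=20)
t_pre = time_calls(lambda: flip_into(src, dst), iterations=20)

print("new buffer per call [ns]:", min(t_new))
print("pre-allocated       [ns]:", min(t_pre))
```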

Results

The results vary a bit between image sizes. However, the -O3 results seem to look almost identical independent of what notebook or workstation I choose. Running it containerized or natively also seems to have no impact on relative performance between C++ and Python. -O0 results do vary a bit between machines.

When it comes to using the GPU, things look a bit clearer with respect to C++ gains.
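Since the Python script logs run times in seconds and the C++ program logs them in nanoseconds, the two CSV files need a common unit before they can be compared. The sketch below shows one way to do this with the standard library: it computes the median run time per implementation and resolution and derives a speed-up in percent, assuming the speed-up is defined as t_slow / t_fast - 1. The function names and the sample rows are made up for illustration and are not measured results.

```python
import csv
import io
import statistics
from collections import defaultdict


def median_runtimes(csv_text, to_seconds=1.0):
    """Median run time in seconds per (implementation, resolution)."""
    samples = defaultdict(list)
    reader = csv.reader(io.StringIO(csv_text))
    next(reader)  # skip the header line
    for implementation, resolution, runtime in reader:
        samples[(implementation, int(resolution))].append(
            float(runtime) * to_seconds)
    return {key: statistics.median(vals) for key, vals in samples.items()}


def speedup_percent(slow, fast):
    """How much faster `fast` is than `slow`; 100.0 means twice as fast."""
    return (slow / fast - 1.0) * 100.0


# made-up sample rows in the two log formats (not measured results)
py_csv = """Implementation,Resolution,Runtime [s]
CPU: Python OpenCV Flip(-1),128,0.00003
CPU: Python OpenCV Flip(-1),128,0.00005
CPU: Python OpenCV Flip(-1),128,0.00004
"""
cpp_csv = """Implementation,Resolution,Runtime [ns]
CPU: C++ OpenCV Flip(-1),128,20000
CPU: C++ OpenCV Flip(-1),128,30000
CPU: C++ OpenCV Flip(-1),128,10000
"""

py = median_runtimes(py_csv)
cpp = median_runtimes(cpp_csv, to_seconds=1e-9)  # nanoseconds to seconds

t_py = py[("CPU: Python OpenCV Flip(-1)", 128)]
t_cpp = cpp[("CPU: C++ OpenCV Flip(-1)", 128)]
print(f"speed-up: {speedup_percent(t_py, t_cpp):.0f}%")
```

The median is used here because single iterations can be disturbed by other processes; the mean or the minimum would be equally valid choices depending on what should be reported.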

Source Code

import time

import cv2
import numpy as np


def write_results(output_file,
                  implementation_str: str,
                  durations: list,
                  resolution: tuple):
    # one CSV row per measured iteration
    for duration in durations:
        out_str = implementation_str + str(resolution[0]) + ',' +\
            str(duration) + '\n'
        output_file.write(out_str)
    output_file.flush()


def main():
    print('=' * 72)
    iterations = 100
    min_size = 128
    max_size = 16385
    div_half = 2
    resize_val = 1.0

    output_file = open('./results_rotate_ocv_py_prealloc.csv', 'w')
    output_file.write('Implementation,Resolution,Runtime [s]\n')

    img_orig = cv2.imread('./pattern.png', cv2.IMREAD_COLOR)

    resolutions_cv = []
    img_size = min_size
    while (img_size < max_size+1):
        resolutions_cv.append((img_size, img_size))
        img_size *= 2

    rotation_angles = [45, 90, 135, 180, 225, 270, 315]

    print('Benchmarking CPU implementations')
    for resolution in resolutions_cv:
        print("Benchmarking square image of resolution {}".format(
            resolution))
        durations = []
        img_resized = cv2.resize(img_orig, resolution)
        # pre-allocated version
        img_rotated = np.zeros_like(img_resized)
        # other versions use img_rotated = cv2.flip... etc.
        for _ in range(iterations):
            tic = time.time()
            cv2.flip(img_resized, -1, img_rotated)
            toc = time.time()
            run_time = toc - tic
            durations.append(run_time)
        implementation_str = 'CPU: Python OpenCV Flip(-1),'
        write_results(output_file,
                      implementation_str,
                      durations,
                      resolution)

        (h, w) = img_resized.shape[:2]
        # integer center; the C++ version uses (w - 1) / 2 instead
        center = (w//2, h//2)
        for angle in rotation_angles:
            rotation_matrix = cv2.getRotationMatrix2D(center,
                                                      angle,
                                                      resize_val)
            durations = []
            for _ in range(iterations):
                tic = time.time()
                cv2.warpAffine(img_resized,
                               rotation_matrix,
                               resolution,
                               dst=img_rotated)
                toc = time.time()
                run_time = toc - tic
                durations.append(run_time)
            implementation_str = 'CPU: Python OpenCV rot ' +\
                str(angle) + ','
            write_results(output_file,
                          implementation_str,
                          durations,
                          resolution)

    print('Benchmarking GPU implementations')
    for resolution in resolutions_cv:
        print("Benchmarking square image of resolution {}".format(
            resolution))
        durations = []
        img_resized = cv2.resize(img_orig, resolution)
        g_img_resized = cv2.cuda_GpuMat(img_resized)
        # pre-allocated version
        g_img_rotated = g_img_resized.clone()
        for _ in range(iterations):
            tic = time.time()
            cv2.cuda.flip(g_img_resized, -1, g_img_rotated)
            toc = time.time()
            run_time = toc - tic
            durations.append(run_time)
        implementation_str = 'GPU: Python OpenCV CUDA Flip(-1),'
        write_results(output_file,
                      implementation_str,
                      durations,
                      resolution)

        (h, w) = img_resized.shape[:2]
        center = (w//2, h//2)
        for angle in rotation_angles:
            rotation_matrix = cv2.getRotationMatrix2D(center,
                                                      angle,
                                                      resize_val)
            durations = []
            for _ in range(iterations):
                tic = time.time()
                cv2.cuda.warpAffine(g_img_resized,
                                    rotation_matrix,
                                    resolution,
                                    dst=g_img_rotated)
                toc = time.time()
                run_time = toc - tic
                durations.append(run_time)
            implementation_str = 'GPU: Python OpenCV CUDA rot ' +\
                str(angle) + ','
            write_results(output_file,
                          implementation_str,
                          durations,
                          resolution)

    # close the log file explicitly once all results are written
    output_file.close()
    print('=' * 72)


if __name__ == '__main__':
    main()

#include <chrono>
#include <cmath>
#include <cstdint>
#include <fstream>
#include <iostream>
#include <string>
#include <vector>

#include <opencv2/opencv.hpp>
#include <opencv2/core/cuda.hpp>
#include <opencv2/cudaarithm.hpp>
#include <opencv2/cudawarping.hpp>

// integer power: returns base^power for power >= 0
int pow_int(int base, int power)
{
    int res = 1;
    for (int i = 0; i < power; ++i)
    {
        res *= base;
    }
    return res;
}

// writes one CSV row per measured iteration
void write_results(std::ofstream &output_file,
                   std::string &implementation_str,
                   std::vector<uint64_t> &durations,
                   cv::Size &resolution)
{
    for (auto duration: durations)
    {
        std::string str_to_write = implementation_str +
            std::to_string(resolution.width) + "," +
            std::to_string(duration) + "\n";
        output_file << str_to_write;
    }
    output_file.flush();
}

int main() {
    const int header_length = 72;
    std::cout << std::string(header_length, '=') << std::endl;
    const int iterations = 100;
    const int min_size = 128;
    const int max_size = 16385;
    const float half = 2;
    const double resize_val = 1.0;

    const std::string results_fn("./results_rotate_ocv_cpp_prealloc.csv");
    std::ofstream output_file(results_fn);
    if (!output_file) {
        std::cout << "Can't open log file. Aborting benchmark!" << std::endl;
        return 1;
    }
    output_file << "Implementation,Resolution,Runtime [ns]\n";

    cv::Mat img_orig = cv::imread("../pattern.png", cv::IMREAD_COLOR);

    std::vector<cv::Size> resolutions_cv;
    const std::vector<int> rotation_angles =
        {45, 90, 135, 180, 225, 270, 315};

    int img_size = min_size;
    while (img_size < max_size+1)
    {
        resolutions_cv.push_back(cv::Size(img_size,img_size));
        img_size *= 2;
    }

    // CPU
    std::cout << "Benchmarking CPU implementations" << std::endl;
    auto tic = std::chrono::high_resolution_clock::now();
    auto toc = std::chrono::high_resolution_clock::now();
    for (auto resolution: resolutions_cv)
    {
        std::cout << "Benchmarking square image of resolution " << std::to_string(resolution.width) << std::endl;
        std::vector<uint64_t> durations;
        durations.reserve(iterations);
        cv::Mat img_resized(resolution, CV_8UC3);
        // benchmarks without pre-allocated output images used this:
        // cv::Mat img_rotated;
        // instead of
        cv::Mat img_rotated(resolution, CV_8UC3);
        cv::resize(img_orig, img_resized, resolution);
        uint64_t run_time = 0;
        for (int i = 0; i < iterations; ++i)
        {
            tic = std::chrono::high_resolution_clock::now();
            cv::flip(img_resized, img_rotated, -1);
            toc = std::chrono::high_resolution_clock::now();
            run_time = std::chrono::duration_cast<std::chrono::nanoseconds>
                (toc - tic).count();
            durations.push_back(run_time);
        }
        std::string implementation_str = "CPU: C++ OpenCV Flip(-1),";
        write_results(output_file, implementation_str, durations, resolution);

        cv::Point2f center_coord(static_cast<float>(img_resized.cols - 1) / half,
                                 static_cast<float>(img_resized.rows - 1) / half);
        for (auto angle: rotation_angles)
        {
            cv::Mat rotation_matrix = cv::getRotationMatrix2D(center_coord,
                                                              static_cast<double>(angle),
                                                              resize_val);
            durations.clear();
            for (int i = 0; i < iterations; ++i)
            {
                tic = std::chrono::high_resolution_clock::now();
                cv::warpAffine(img_resized, img_rotated, rotation_matrix, resolution);
                toc = std::chrono::high_resolution_clock::now();
                run_time = std::chrono::duration_cast<std::chrono::nanoseconds>
                    (toc - tic).count();
                durations.push_back(run_time);
            }
            implementation_str = "CPU: C++ OpenCV rot "+std::to_string(angle)+",";
            write_results(output_file, implementation_str, durations, resolution);
        }
    }

    // GPU
    std::cout << "Benchmarking GPU implementations" << std::endl;
    for (auto resolution: resolutions_cv)
    {
        std::cout << "Benchmarking square image of resolution " << std::to_string(resolution.width) << std::endl;
        std::vector<uint64_t> durations;
        durations.reserve(iterations);
        cv::Mat img_resized(resolution, CV_8UC3);
        cv::resize(img_orig, img_resized, resolution);
        cv::cuda::GpuMat g_img_resized(img_resized);
        // benchmarks without pre-allocated output images used this:
        // cv::cuda::GpuMat g_img_rotated;
        // instead of
        cv::cuda::GpuMat g_img_rotated(resolution, CV_8UC3);
        uint64_t run_time = 0;
        for (int i = 0; i < iterations; ++i)
        {
            tic = std::chrono::high_resolution_clock::now();
            cv::cuda::flip(g_img_resized, g_img_rotated, -1);
            toc = std::chrono::high_resolution_clock::now();
            run_time = std::chrono::duration_cast<std::chrono::nanoseconds>
                (toc - tic).count();
            durations.push_back(run_time);
        }
        std::string implementation_str = "GPU: C++ OpenCV CUDA Flip(-1),";
        write_results(output_file, implementation_str, durations, resolution);

        cv::Point2f center_coord(static_cast<float>(g_img_resized.cols - 1) / half,
                                 static_cast<float>(g_img_resized.rows - 1) / half);
        for (auto angle: rotation_angles)
        {
            cv::Mat rotation_matrix = cv::getRotationMatrix2D(center_coord,
                                                              static_cast<double>(angle),
                                                              resize_val);
            durations.clear();
            for (int i = 0; i < iterations; ++i)
            {
                tic = std::chrono::high_resolution_clock::now();
                cv::cuda::warpAffine(g_img_resized, g_img_rotated, rotation_matrix, resolution);
                toc = std::chrono::high_resolution_clock::now();
                run_time = std::chrono::duration_cast<std::chrono::nanoseconds>
                    (toc - tic).count();
                durations.push_back(run_time);
            }
            // label format matches the CPU rows ("rot <angle>")
            implementation_str = "GPU: C++ OpenCV CUDA rot "+std::to_string(angle)+",";
            write_results(output_file, implementation_str, durations, resolution);
        }
    }

    output_file.close();
    std::cout << std::string(header_length, '=') << std::endl;
    return 0;
}

# cmake file
cmake_minimum_required(VERSION 3.8)

set(CMAKE_CXX_COMPILER "clang++")
# -O0 as listed here; the optimized runs discussed above use -O3 instead
SET(CMAKE_CXX_FLAGS "-O0")
SET(CMAKE_C_FLAGS "-O0")

set(CMAKE_CXX_STANDARD 20)
set(CMAKE_CXX_STANDARD_REQUIRED ON)

set(CMAKE_CXX_CLANG_TIDY
    clang-tidy;
    -header-filter=.;
    -checks=-*,clang-analyzer-*,cppcoreguidelines-*,google-*;
    -warnings-as-errors=*;)
#set(CMAKE_CXX_CPPCHECK "cppcheck")

project(opencv_rotate_imgs_cpp)

find_package(OpenCV REQUIRED)
find_package(CUDA REQUIRED)

# CMake lists are separated by whitespace, not by commas
include_directories(
    ${OpenCV_INCLUDE_DIRS}
    ${CUDA_INCLUDE_DIRS}
)

add_executable(
    opencv_rotate_imgs_cpp_prealloc
    opencv_rotate_imgs_cpp_prealloc.cc
)

# FindCUDA exposes its libraries as CUDA_LIBRARIES
target_link_libraries(
    opencv_rotate_imgs_cpp_prealloc
    ${OpenCV_LIBS}
    ${CUDA_LIBRARIES}
)

Other Options for Image Rotation

Libraries other than OpenCV could be used as well. Some libraries may actually contain handcrafted functions that make rotations by [0, 90, 180, 270] degrees much faster than applying a rotation matrix. Some examples of other libraries providing image rotation are: