
General remarks

“How to read, write and display” images or frames from video sources is considered the “Hello, World!” of OpenCV. However, there is much more to it. This is certainly not a “Hello, World!”, nor is it a complete, in-depth explanation of every aspect of opencv2/imgcodecs.hpp or opencv2/videoio.hpp. It is the result of working with a large subset of OpenCV’s I/O components and reading a lot of the underlying source code due to a lack of proper explanations and tutorials.

The examples come in C++ and Python versions. Unlike many others, I consider using namespace std; and using namespace cv; a bad habit, as they make debugging a bit trickier, whereas spelling out namespaces makes it clear where things come from. Hence all examples use explicit std:: and cv:: prefixes. If no namespace is used and a class/function is not defined locally, then it originates from the C library.

For those not familiar with C++ and compiling source code, I recommend using CMake. A CMakeLists.txt for a project using OpenCV could look like this:

```cmake
cmake_minimum_required(VERSION 3.12)

# the compiler has to be set before project()
set(CMAKE_CXX_COMPILER "clang++") # or g++

project(test)

set(CMAKE_CXX_FLAGS "-O3")
set(CMAKE_C_FLAGS "-O3")

# min. standard required for OpenCV is C++11
set(CMAKE_CXX_STANDARD 17)
set(CMAKE_CXX_STANDARD_REQUIRED ON)

# options to do some code checks
# set(CMAKE_CXX_CPPCHECK "cppcheck")
# OR
# set(CMAKE_CXX_CLANG_TIDY
#     clang-tidy;
#     -header-filter=.;
#     -checks=-*,clang-analyzer-*,cppcoreguidelines-*,google-*;
#     -warnings-as-errors=*;)

find_package(OpenCV REQUIRED)

include_directories(${OpenCV_INCLUDE_DIRS})

add_executable(test test.cc)

target_link_libraries(test ${OpenCV_LIBS})
```

NB!: OpenCV seems to be compliant with C++11, whereas e.g. PyTorch (libtorch) requires C++14 to be built from source, although the shared library seems to be compatible with C++11. Keep that in mind when combining OpenCV with deep learning pipelines. I highly recommend using the latest standard if possible to keep the source code somewhat modern.

Image I/O

A fundamental task in any computer vision pipeline is reading and writing images (frames). Depending on the source (e.g. industrial cameras), other SDKs might be used, but most common scenarios are covered by OpenCV. The important classes and functions for image I/O require opencv2/core.hpp and opencv2/imgcodecs.hpp.

Technically speaking, we can split reading an image into two tasks:

- reading an image file (some binary or byte representation)

- decoding and deserializing the image (if encoded in some format) and moving the data into a matrix structure (representation)

Saving an image that is stored as a matrix works the other way around:

- encoding the image and serializing it

- writing the image to a file

Loading Images

In order to read an (encoded) image from a file into a cv::Mat, we need to make sure that the image is available in one of the supported formats, which also depends on the build process and on the libraries available on the system the pipeline is deployed on.

Currently, the following file formats are supported (source); entries marked “see the Note section” refer to the notes in the cv::imread documentation, i.e. their availability depends on how OpenCV was built:

- Windows bitmaps: *.bmp, *.dib (always supported)
- JPEG files: *.jpeg, *.jpg, *.jpe (see the Note section)
- JPEG 2000 files: *.jp2 (see the Note section)
- Portable Network Graphics: *.png (see the Note section)
- WebP: *.webp (see the Note section)
- Portable image format: *.pbm, *.pgm, *.ppm, *.pxm, *.pnm (always supported)
- PFM files: *.pfm (see the Note section)
- Sun rasters: *.sr, *.ras (always supported)
- TIFF files: *.tiff, *.tif (see the Note section)
- OpenEXR Image files: *.exr (see the Note section)
- Radiance HDR: *.hdr, *.pic (always supported)
- Raster and Vector geospatial data supported by GDAL (see the Note section)

We can check whether a file can be decoded using cv::haveImageReader; cv::haveImageWriter does the same for encoding, based on the file extension.

NB: It seems like OpenCV supports only CPU implementations of decoders by default. If we want to use e.g. a GPU or FPGA, we may need a custom function for it, or if supported, use GStreamer via the cv::VideoCapture module (see below).

It is important to note that images are converted to CV_8UC3 (8 bit BGR) by default if no specific read mode is selected. ImreadModes help us avoid throwing away 16 bit data or transparency, or simply reduce overhead by reading grayscale images as grayscale without having to convert them (cv::COLOR_BGR2GRAY). Some handy cv::imread modes:

- IMREAD_LOAD_GDAL for GeoTIFFs
- IMREAD_ANYDEPTH for more than 8 bit channel ranges (e.g. for medical scans); combine it with IMREAD_ANYCOLOR to keep the color format as well
- IMREAD_UNCHANGED for reading transparent images and keeping floating point numbers in images
- IMREAD_GRAYSCALE for reading grayscale images

That being said, images can be loaded using cv::imread:

```cpp
// C++
#include <iostream>
#include <opencv2/core.hpp>
#include <opencv2/imgcodecs.hpp>

int main() {
    cv::Mat img = cv::imread("filename.png", cv::IMREAD_ANYCOLOR);
    if (img.empty()) {
        std::cout << "Reading image failed" << std::endl;
        return 1; // or error handling ;))
    }
    // do something with img
    return 0;
}
```

```python
# Python
import sys
import cv2

img = cv2.imread('filename.png', cv2.IMREAD_ANYCOLOR)
if img is None:
    print('Reading image failed')
    sys.exit(1)  # or error handling ;))
# do something with img
```

Multipage images can be loaded using cv::imreadmulti, but that seems to be exotic nowadays. cv::imcount can count the number of images (pages) in one file (just in case).

Saving Images

Writing images is just as simple (or difficult) as reading them.

Standard Image Formats

NB: It seems like OpenCV supports only CPU implementations of encoders by default. If we want to use e.g. a GPU or FPGA, we may need a custom function for it, or if supported, use GStreamer via the cv::VideoWriter module (see below).

Similar to ImreadModes, there exist ImwriteFlags to specify how an image is saved:

```cpp
// C++
// set encoder settings
std::vector<int> encoder_settings = {cv::IMWRITE_JPEG_QUALITY, 90, cv::IMWRITE_JPEG_PROGRESSIVE, 1};
// saving the image returns a value of type bool
// indicating if the image was saved successfully
bool write_success = cv::imwrite("out.jpeg", img, encoder_settings);
std::cout << write_success << std::endl;
```

```python
# Python
# set encoder settings
encoder_settings = [cv2.IMWRITE_JPEG_QUALITY, 90, cv2.IMWRITE_JPEG_PROGRESSIVE, 1]
# saving the image returns a value of type bool
# indicating if the image was saved successfully
write_success = cv2.imwrite('out.jpeg', img, encoder_settings)
print(write_success)
```

Binary Formats

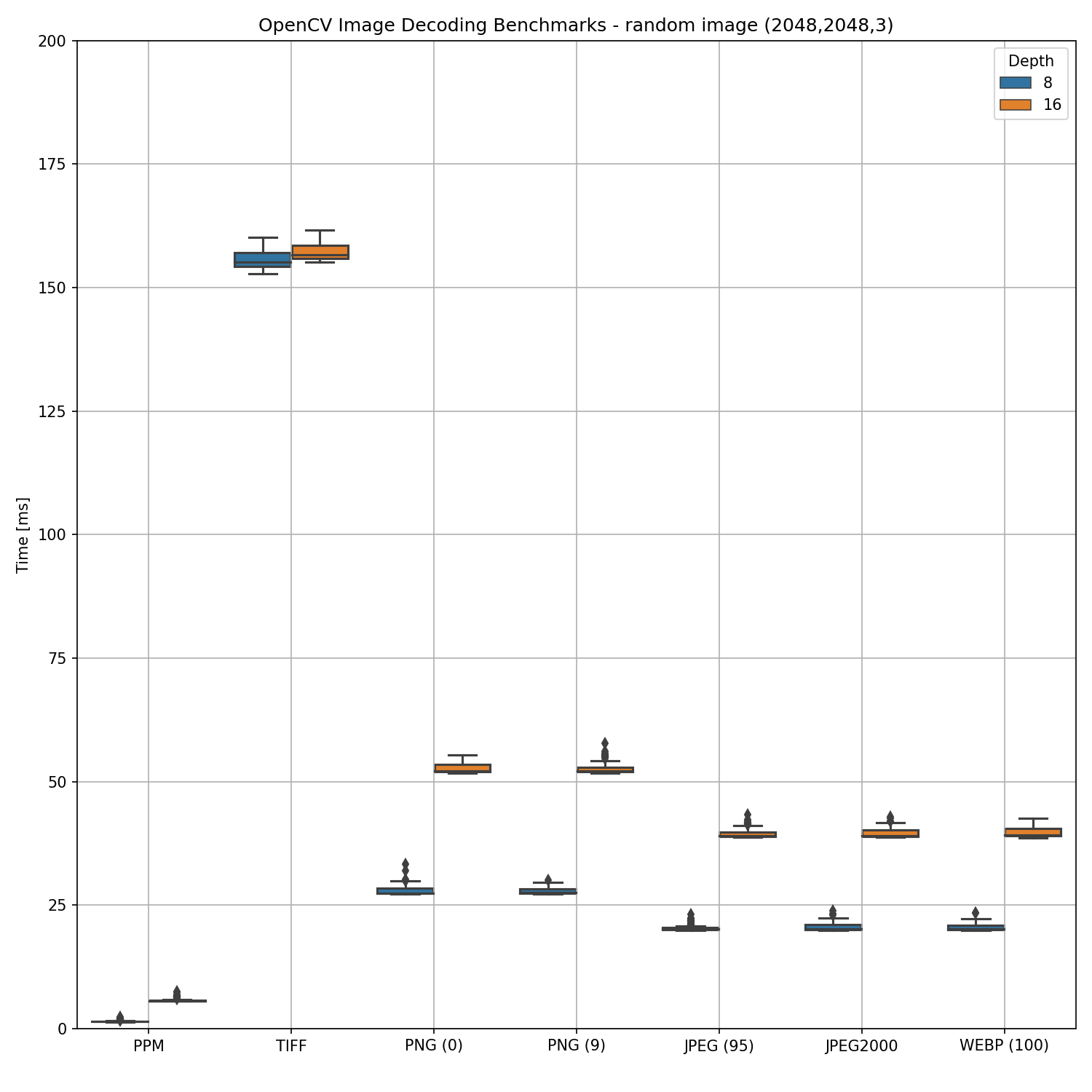

When it comes to storing images, we have to think about performance (see encoding section for some benchmarks). If we have a stream of incoming frames from e.g. industrial cameras, then we can’t save them as a video stream, because in many applications lossless storage is required for “replay pipelines” (incl. legal reasons) and data gathering. We end up with three options:

- 1) evaluating whether the “portable image format” (.ppm, .pgm, etc.) is suitable
- 2) dumping images in binary form to disk
- 3) building a custom file storage solution (which perhaps logs other things besides frames as well)

Option 3) is much more complicated and project specific. Therefore, it is out of scope in this general purpose context.

This means that we are left with the “dump binary images to disk” option. First, the disk we’re dumping the images to needs to be sufficiently fast. High quality NVMe M.2 storage (PCIe 3.0/4.0) should be fast enough to handle multiple industrial cameras simultaneously, depending on bitrates.

OpenCV offers cv::FileStorage as a general purpose storage for any OpenCV object, but it is not well suited in this context, as it targets text based XML, YAML and JSON files.

If we use Python, then the image itself is available as a NumPy array which means that we can simply use np.save:

```python
# Python
import numpy as np

np.save('filename.npy', img, allow_pickle=False, fix_imports=False)
img_loaded = np.load('filename.npy', mmap_mode=None, allow_pickle=False, fix_imports=False)
```

When using C++, the situation is less straightforward. Perhaps the best solution is the one posted on StackOverflow that stores and loads a cv::Mat as a binary file properly and with high performance.
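The idea behind such a binary dump (a small header describing the matrix, followed by the raw pixel data) can be sketched in Python with NumPy alone. The header layout below (rows, columns, channels and the NumPy dtype string) is our own assumption and not the format of the StackOverflow solution; dump_raw and load_raw are hypothetical names:

```python
import struct
import numpy as np

# hypothetical header: rows, cols, channels as little-endian uint32,
# followed by the NumPy dtype string (e.g. '|u1') padded to 8 bytes
_HEADER = struct.Struct('<III8s')


def dump_raw(path, img):
    # contiguous memory allows writing the pixel data in one go
    img = np.ascontiguousarray(img)
    channels = 1 if img.ndim == 2 else img.shape[2]
    header = _HEADER.pack(img.shape[0], img.shape[1], channels, img.dtype.str.encode())
    with open(path, 'wb') as f:
        f.write(header)
        f.write(img.tobytes())


def load_raw(path):
    with open(path, 'rb') as f:
        rows, cols, channels, dtype = _HEADER.unpack(f.read(_HEADER.size))
        data = np.frombuffer(f.read(), dtype=np.dtype(dtype.rstrip(b'\0').decode()))
    # single channel images come back as 2D arrays
    shape = (rows, cols) if channels == 1 else (rows, cols, channels)
    return data.reshape(shape)
```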

Decoding Images

Depending on the project we are working on, we may receive encoded images as input (e.g. from a third party library), or they are read from a binary file using normal file I/O instead of cv::imread. In such a case only the decoding part is left. The straightforward approach to this is using cv::imdecode.

NB: It seems like OpenCV supports only CPU implementations of decoders by default. If we want to use e.g. a GPU or FPGA, we may need a custom function for it, or if supported, use GStreamer via the cv::VideoCapture module.

```cpp
// C++
std::vector<uchar> mem_buffer_enc_img; // contains encoded image
cv::Mat dec_image = cv::imdecode(mem_buffer_enc_img, cv::IMREAD_ANYCOLOR);
// dec_image.empty() is true if decoding failed
```

```python
# Python
# type(img_buffer): np.ndarray, dtype: np.uint8
dec_img = cv2.imdecode(img_buffer, cv2.IMREAD_ANYCOLOR)
# dec_img is None if decoding failed
```

Sometimes images are stored as (encoded) base64 strings in databases.

When using Python, base64 decoding is straightforward, as base64 is part of the Python standard library:

```python
# Python
import base64

import cv2
import numpy as np

# img_b64_enc_str contains the base64 encoded image as str
img_b64_enc = img_b64_enc_str.encode()
img_enc = base64.b64decode(img_b64_enc)
img_buffer = np.frombuffer(img_enc, np.uint8)
dec_img = cv2.imdecode(img_buffer, cv2.IMREAD_ANYCOLOR)
```

The situation is a bit trickier in C++, as base64 decoding is not part of the C++ standard library. base64, Boost, cppcodec and Qt offer some options, or we simply write a base64 encoder/decoder ourselves. Which library to use probably depends on personal preferences and project requirements.

```cpp
// C++ Qt example
#include <QByteArray>
#include <opencv2/imgcodecs.hpp>
#include <string>
#include <vector>

std::string encoded_img_b64_str; // contains the base64 encoded data as string
QByteArray encoded_img = QByteArray::fromBase64(QByteArray::fromStdString(encoded_img_b64_str));
std::vector<uchar> out_buffer(encoded_img.begin(), encoded_img.end());
cv::Mat dec_img = cv::imdecode(out_buffer, cv::IMREAD_ANYCOLOR);
```

Encoding Images

```cpp
// C++
#include <opencv2/core.hpp>
#include <opencv2/imgcodecs.hpp>
#include <vector>

// create a random dummy image
cv::Mat random_image(2048, 2048, CV_8UC3);
// the upper boundary of cv::randu is exclusive, hence 256
cv::randu(random_image, cv::Scalar(0, 0, 0), cv::Scalar(256, 256, 256));
// set encoder settings
std::vector<int> encoder_settings = {cv::IMWRITE_JPEG_QUALITY, 90, cv::IMWRITE_JPEG_PROGRESSIVE, 1};
// create buffer to store encoded image
std::vector<uchar> mem_buffer; // std::vector<uint8_t> would work as well ;)
// run encoding, returns false on failure
bool encode_success = cv::imencode(".jpeg", random_image, mem_buffer, encoder_settings);
```

```python
# Python
import cv2
import numpy as np

# create random dummy image
random_image = np.random.randint(0, 256, (2048, 2048, 3), dtype=np.uint8)
# set encoder settings
encoder_settings = [cv2.IMWRITE_JPEG_QUALITY, 90, cv2.IMWRITE_JPEG_PROGRESSIVE, 1]
# cv2.imencode returns a tuple:
# the first element states success of the operation {True, False},
# the second element is the encoded image as np.uint8 array
success, encoded_img = cv2.imencode('.jpeg', random_image, encoder_settings)
```

If we want to embed images into HTML files and the image in question is already available as a file, then we need the image in base64 format. To convert an image we can use the following command (GNU coreutils; -w 0 disables line wrapping):

```shell
$ base64 -w 0 input_image.png > input_image.base64
```

Storing base64 encoded images is sometimes done in databases as well. In that case the image is stored as TEXT (e.g. PostgreSQL) and not as binary. One of the ideas behind this is that an image can be embedded into an HTML document directly. This would look like this:

```html
<img src="data:image/jpeg;base64,ENCODED_IMG_STRING">
```

When using Python, base64 encoding is straightforward, as base64 is part of the Python standard library:

```python
# Python
import base64

# encoded_img is the buffer returned by cv2.imencode (see above)
encoded_img_b64 = base64.b64encode(encoded_img).decode()
```
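Putting the pieces together, a data URI for an HTML img tag can be built from any encoded buffer. A Python sketch; the helper names and the default MIME type image/png are our own choices, and the MIME type has to match the actual encoding:

```python
import base64


def to_data_uri(encoded_bytes, mime='image/png'):
    # encoded_bytes: an already encoded image, e.g. the buffer from
    # cv2.imencode or the content of an image file
    b64 = base64.b64encode(bytes(encoded_bytes)).decode('ascii')
    return 'data:{};base64,{}'.format(mime, b64)


def to_img_tag(encoded_bytes, mime='image/png', alt=''):
    return '<img src="{}" alt="{}">'.format(to_data_uri(encoded_bytes, mime), alt)
```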

The situation is a bit trickier in C++, as base64 encoding is not part of the C++ standard library. base64, Boost, cppcodec and Qt offer some options, or we simply write a base64 encoder/decoder ourselves. Which library to use probably depends on personal preferences and project requirements.

```cpp
// C++ Qt example
#include <QByteArray>
#include <string>

// mem_buffer contains the encoded image (see cv::imencode above)
QByteArray img_enc_as_byte_array = QByteArray::fromRawData(
    reinterpret_cast<const char*>(mem_buffer.data()), static_cast<int>(mem_buffer.size()));
// base64 encode and convert it to a standard library string
std::string encoded_img_b64 = img_enc_as_byte_array.toBase64().toStdString();
```

Video I/O

Video I/O with OpenCV can be a bit tricky, but that is less OpenCV’s fault than that of the way it is built/packaged. Therefore, it is important to make sure that e.g. GStreamer is supported in case we want to use it. OpenCV provides a handy function that gives us some insights regarding the build process and supported backends. We can simply print it to the terminal:

```cpp
// C++
std::cout << cv::getBuildInformation() << std::endl;
```

```python
# Python
print(cv2.getBuildInformation())
```

Capturing Frames from Videos

Most examples start with something like this:

```cpp
// C++
#include <opencv2/core.hpp>
#include <opencv2/videoio.hpp>

int main() {
    cv::Mat frame;
    cv::VideoCapture cap(0);
    // capture loop, do something
    return 0;
}
```

```python
# Python
import cv2


def main():
    cap = cv2.VideoCapture(0)
    # capture loop, do something
```

It almost doesn’t matter whether we’re writing production code or just a quick prototype: we have no idea which backend is used and, depending on how we installed OpenCV, we don’t even know which backends it was compiled with unless we check. Consider initializing a VideoCapture instance without specifying the backend an ABSOLUTE NO-GO! It is significantly better to program a “Hello, World!” like this:

```cpp
// C++
#include <opencv2/core.hpp>
#include <opencv2/videoio.hpp>

int main() {
    cv::Mat frame;
    cv::VideoCapture cap("/dev/video0", cv::CAP_V4L2);
    // capture loop, do something
    return 0;
}
```

```python
# Python
import cv2


def main():
    cap = cv2.VideoCapture('/dev/video0', cv2.CAP_V4L2)
    # capture loop, do something
```

This way we know exactly what backend we are using. Ultimately, this influences the input we can use and how to address the backend to change capture parameters.

cv::CAP_V4L2 is a less common backend, as it basically supports only V4L2 devices such as USB webcams on Linux. cv::CAP_FFMPEG is more common and the default if OpenCV is installed via pip. When compiled manually or provided by the OS (e.g. Arch Linux), cv::CAP_GSTREAMER is likely to be supported as well. cv::CAP_GSTREAMER is probably the most straightforward way to stream videos from an OpenCV pipeline (see Writing/Streaming Frames as Video).

When it comes to reading frames using cv::CAP_GSTREAMER, we can either use simple filenames (e.g. video_file.mp4) and let OpenCV take care of the rest, or use proper GStreamer pipeline strings ending in ! appsink. As this is no GStreamer tutorial, I’ll not cover any details here. Various GPU encoders differ in their properties etc., so just look them up as needed.
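For orientation, a pipeline string of that kind could look like the following Python sketch. filesrc, decodebin, videoconvert and appsink are standard GStreamer elements, but whether this exact pipeline works depends on the installed plugins; gst_file_pipeline is a hypothetical helper:

```python
def gst_file_pipeline(path):
    # decodebin picks a suitable decoder; videoconvert plus the caps filter
    # hand BGR frames to OpenCV via appsink
    return ('filesrc location="{}" ! decodebin ! videoconvert ! '
            'video/x-raw,format=BGR ! appsink'.format(path))


# usage (requires OpenCV built with GStreamer):
# cap = cv2.VideoCapture(gst_file_pipeline('video_file.mp4'), cv2.CAP_GSTREAMER)
```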

cv::VideoCapture exposes capture properties which can be read using cap.get(cv::CAP_PROP_...). Some backends such as cv::CAP_V4L2 expose a few settings which can be used to e.g. select which codec, frame rate and resolution a USB camera should provide. They can be set using cap.set(cv::CAP_PROP_...):

```cpp
// C++
int codec = cv::VideoWriter::fourcc('M', 'J', 'P', 'G');
cv::VideoCapture cap("/dev/video0", cv::CAP_V4L2);
cap.set(cv::CAP_PROP_FOURCC, codec);
cap.set(cv::CAP_PROP_FPS, 30);
cap.set(cv::CAP_PROP_FRAME_WIDTH, 1280);
cap.set(cv::CAP_PROP_FRAME_HEIGHT, 720);
```

```python
# Python
import cv2

codec = cv2.VideoWriter.fourcc('M', 'J', 'P', 'G')
cap = cv2.VideoCapture('/dev/video0', cv2.CAP_V4L2)
cap.set(cv2.CAP_PROP_FOURCC, codec)
cap.set(cv2.CAP_PROP_FPS, 30)
cap.set(cv2.CAP_PROP_FRAME_WIDTH, 1280)
cap.set(cv2.CAP_PROP_FRAME_HEIGHT, 720)
```
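cap.set() only tells us whether the backend accepted the call, so it is worth reading the values back with cap.get(). A Python sketch with two hypothetical helpers: one turns the CAP_PROP_FOURCC value back into a readable string, the other applies settings and reports every property whose read-back value differs (drivers may, for instance, pick the closest supported resolution):

```python
def fourcc_to_str(code):
    # cap.get() returns a float; the four characters are packed little-endian
    code = int(code)
    return ''.join(chr((code >> (8 * i)) & 0xFF) for i in range(4))


def apply_and_verify(cap, settings):
    # settings: {cv2.CAP_PROP_...: value}; returns {prop: actual value} for mismatches
    mismatches = {}
    for prop, value in settings.items():
        cap.set(prop, value)
        actual = cap.get(prop)
        if actual != value:
            mismatches[prop] = actual
    return mismatches


# usage sketch:
# cap = cv2.VideoCapture('/dev/video0', cv2.CAP_V4L2)
# print(apply_and_verify(cap, {cv2.CAP_PROP_FRAME_WIDTH: 1280, cv2.CAP_PROP_FRAME_HEIGHT: 720}))
# print(fourcc_to_str(cap.get(cv2.CAP_PROP_FOURCC)))
```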

Unlike cv::CAP_V4L2, which exposes some properties via cap.set, cv::CAP_FFMPEG lets us set some options using the environment variable OPENCV_FFMPEG_CAPTURE_OPTIONS. We can think of its value as a hash map (key value storage), with keys and values separated by ; and multiple key value pairs separated by |. There is OPENCV_FFMPEG_WRITER_OPTIONS as well, to specify e.g. the encoder (usually for hardware acceleration) when writing a video to disk. NB!: These are FFMPEG options and details can be found in the FFMPEG docs, not in the OpenCV docs.
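Building that string by hand is error prone, so a tiny Python helper can assemble it from a dict. The helper names are hypothetical; the separators are the ones described above. The variable is read when the capture is opened, so it has to be set before creating the cv2.VideoCapture:

```python
import os


def ffmpeg_options(options):
    # keys and values are separated by ';', key/value pairs by '|'
    return '|'.join('{};{}'.format(k, v) for k, v in options.items())


def set_ffmpeg_capture_options(options):
    # call this before cv2.VideoCapture(..., cv2.CAP_FFMPEG)
    os.environ['OPENCV_FFMPEG_CAPTURE_OPTIONS'] = ffmpeg_options(options)
```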

An example for reading RTSP streams over TCP and decoding them (H.264) on an NVIDIA GPU would look like this:

```cpp
// C++
#include <cstdlib>
#include <opencv2/videoio.hpp>

// setenv is POSIX (on Windows, _putenv_s can be used instead);
// the variable has to be set before the capture is opened
setenv("OPENCV_FFMPEG_CAPTURE_OPTIONS", "rtsp_transport;tcp|video_codec;h264_cuvid", 1);
cv::VideoCapture cap("rtsp://some_address:port/stream_id", cv::CAP_FFMPEG);
```

```python
# Python
import os
import cv2

os.environ['OPENCV_FFMPEG_CAPTURE_OPTIONS'] = 'rtsp_transport;tcp|video_codec;h264_cuvid'
cap = cv2.VideoCapture('rtsp://some_address:port/stream_id', cv2.CAP_FFMPEG)
```

Reading frames is straightforward:

```cpp
// C++
#include <cstdlib>
#include <iostream>
#include <opencv2/core.hpp>
#include <opencv2/videoio.hpp>

int main() {
    cv::VideoCapture cap("/dev/video0", cv::CAP_V4L2);
    cv::Mat frame;
    if (!cap.isOpened()) {
        std::cout << "Frame capture failed/could not open device" << std::endl;
        exit(1);
    }
    for (;;) {
        cap >> frame; // cap.read(frame) would work as well
        if (frame.empty()) {
            break;
        }
        // do something with the frame obtained
    }
    cap.release();
    return 0;
}
```

```python
# Python
import sys
import cv2

cap = cv2.VideoCapture('/dev/video0', cv2.CAP_V4L2)
if not cap.isOpened():
    print('Frame capture failed/could not open device')
    sys.exit(1)
while True:
    ret, frame = cap.read()
    if not ret:
        break
    # do something with the frame obtained
cap.release()
```

NB!: If we want to use industrial cameras, then the frame is usually provided by some SDK that allows for software or hardware triggers to capture frames, and we don’t have to use OpenCV’s built-in VideoCapture for that. If we want to use hardware decoding in a more fine-grained way or build more complex pipelines with multiple streams etc., then we are better off using the GStreamer or FFMPEG APIs directly and converting frames to cv::Mat instead of using cv::VideoCapture.

NB!: I experienced up to 30% higher frame rates with lower CPU utilization using C++ instead of Python (both single-threaded) when reading the same video file (from both SATA and NVMe M.2 storage). The performance difference seems to depend on bitrate, codec and resolution.

Writing/Streaming Frames as Video

Saving a video to a file can easily be done with cv::VideoWriter. NB!: most codecs expect CV_8UC3 (BGR) input by default, so grayscale/binary images have to be converted to BGR (cv::COLOR_GRAY2BGR), although there is an isColor argument to specify whether the input is color or not.

A simple example would look as follows:

```cpp
// C++
#include <cstdlib>
#include <iostream>
#include <string>
#include <opencv2/core.hpp>
#include <opencv2/videoio.hpp>

int main() {
    // cv::VideoWriter out(args) would work as well instead of using open(args)
    cv::VideoWriter out;
    int codec = cv::VideoWriter::fourcc('M', 'J', 'P', 'G');
    std::string fn_out = "./out.avi";
    double fps = 60.0;
    cv::Size frame_size(1920, 1080); // (width, height), has to match the frames
    out.open(fn_out, cv::CAP_FFMPEG, codec, fps, frame_size);
    if (!out.isOpened()) {
        std::cout << "Could not open output file" << std::endl;
        exit(1); // or error handling
    }
    cv::Mat frame;
    for (;;) {
        // get and process a frame first (break once all frames are written)
        out.write(frame); // out << frame would work as well
    }
    out.release();
    return 0;
}
```

```python
# Python
import sys
import cv2

codec = cv2.VideoWriter.fourcc('M', 'J', 'P', 'G')
fn_out = './out.avi'
fps = 60.0
frame_size = (1920, 1080)  # (width, height), has to match the frames
out = cv2.VideoWriter(fn_out, cv2.CAP_FFMPEG, codec, fps, frame_size)
if not out.isOpened():
    print('Could not open output file')
    sys.exit(1)  # or error handling
while True:
    # get and process a frame first (break once all frames are written)
    out.write(frame)
out.release()
```

Codec and container support for output files with OpenCV is a bit tricky. For anything meaningful, I recommend setting proper environment variables for FFMPEG or using GStreamer with a properly defined pipeline.

Streaming videos from an OpenCV pipeline is a bit trickier. It is handy to compile OpenCV with GStreamer, as this allows deploying normal GStreamer pipelines. Encoding and streaming via a local UDP sink would look like this:

```python
# Python
import cv2

frame_width, frame_height = 1920, 1080  # have to match the frames written

out = cv2.VideoWriter('appsrc ! '
                      'videoconvert ! '
                      'openh264enc bitrate=14000000 complexity=low gop-size=40 ! '
                      'rtph264pay ! '
                      'udpsink host=127.0.0.1 port=9000',
                      cv2.CAP_GSTREAMER,
                      0,  # fourcc code
                      float(60),  # fps
                      (frame_width, frame_height))
```

NB!: It seems like GStreamer pipelines have to be used without specifying GStreamer caps, as this seems to be done internally by OpenCV. Passing 0 as fourcc is required, as codec specification is handled by the GStreamer pipeline; however, we have to pass the frame rate and frame dimensions to cv::VideoWriter.
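To check that the stream actually arrives, a receiving pipeline can be opened with cv2.VideoCapture and cv2.CAP_GSTREAMER. A Python sketch, assuming the default RTP payload type 96 of rtph264pay and that the avdec_h264 decoder (gst-libav) is installed; udp_h264_receiver is a hypothetical helper:

```python
def udp_h264_receiver(port=9000):
    # depayload and decode the RTP/H.264 stream, then hand BGR frames to OpenCV
    caps = 'application/x-rtp,media=video,encoding-name=H264,payload=96,clock-rate=90000'
    return ('udpsrc port={} caps="{}" ! rtph264depay ! avdec_h264 ! '
            'videoconvert ! video/x-raw,format=BGR ! appsink'.format(port, caps))


# usage (requires OpenCV built with GStreamer):
# cap = cv2.VideoCapture(udp_h264_receiver(9000), cv2.CAP_GSTREAMER)
```

Depending on the sender settings (e.g. the config-interval property of rtph264pay), a receiver that joins late may have to wait for the next keyframe before frames can be decoded.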

Note on GPU Decoding/Encoding

OpenCV provides rudimentary support to {de,en}code frames (cv::cuda::GpuMat) on NVIDIA GPUs without copying frames from host to device or vice versa. Examples are provided on GitHub: video_reader.cpp, video_writer.cpp. To compile OpenCV with cudacodec support, the Video Codec SDK is required. Don’t get your hopes up too high: even if OpenCV is compiled properly, it may not work, as this interface/functionality is somewhat unstable. If it doesn’t work out of the box, stop wasting your time on it.

If you deploy something on your own servers and are fine with deploying software written in Python, then try NVIDIA’s VideoProcessingFramework (VPF). The underlying C++ libraries might be usable without Python as well, and VPF integrates nicely with PyTorch. If you intend to decode frames on a GPU without host<->device copies in a production setting that needs to be programmed in C++ or similar, then use GStreamer, FFMPEG or something more custom. Despite the initial development overhead, it will save time and money in the long run.

Notes on Displaying Frames

It seems like some people, including myself, are insane enough to use OpenCV to display frames once in a while - not recommended for production systems! A standard example of “how to display a frame” is usually something like this:

```cpp
// C++
// [...]
for (;;) {
    // [...]
    cv::imshow("Window Title", frame_mat);
    int key = cv::waitKey(1);
    if (key == 27) { // ESC
        break;
    }
    // [...]
}
cv::destroyAllWindows();
// [...]
```

```python
# Python
# [...]
while True:
    # [...]
    cv2.imshow('Window Title', frame_mat)
    key = cv2.waitKey(1)
    if key == 27:  # ESC
        break
    # [...]
cv2.destroyAllWindows()
# [...]
```

However, how are cv::imshow and cv::waitKey related and what the fuck is cv::pollKey?

cv::waitKey returns the code of the pressed key (the ASCII code for regular keys) or -1 if no key was pressed; ESC equals 27 in the example above. Since cv::waitKey(1) is used, it will sleep for at least 1 ms, which highlights a problem already: it has to wait until a time slice (CPU scheduling) is available. Sleeping for 1 ms practically means that we have to put it on a queue and wait until resources are available again. Since cv::waitKey(0) waits indefinitely until a keyboard input is received, we’re running into problems with real-time systems. It is important to know that the image is only drawn while cv::waitKey processes the GUI events; cv::imshow alone does not display anything. cv::pollKey performs the same GUI housekeeping without waiting: it returns the code of the pressed key, or -1 if no key was pressed since the last call.

OpenCV’s display/imshow capabilities offer some nice options to play around with, resize windows etc. However, due to the constraints outlined above, I consider it a toy functionality for debugging, not for production use. A notable mention in this respect: if OpenCV is compiled with OpenGL support and the frame to be displayed is of type cv::cuda::GpuMat, then no additional host <-> device copies happen and the frame is accessed directly on the GPU. This works by creating a named window first using the cv::WINDOW_OPENGL WindowFlag.